How to Avoid Overfitting & Underfitting in Machine Learning

In the previous post, we learned about two common problems that show up when validating machine learning models: “Overfitting” and “Underfitting”.

We also learned how overfitted and underfitted models relate to the concepts of “Bias” and “Variance”.

If you are not sure about these topics, check the earlier posts:

- Overfitting and Underfitting in terms of Model Validation

- Bias and Variance

In this post, we will learn:

- Three solutions to avoid overfitting in terms of training process, model selection and feature engineering

- Two solutions to avoid underfitting in terms of training process and model selection

The goal of selecting a machine learning model is:

- Goal: Find the best model, one with low bias & low variance that shows an optimal fit (neither overfitting nor underfitting)

How to avoid Overfitting

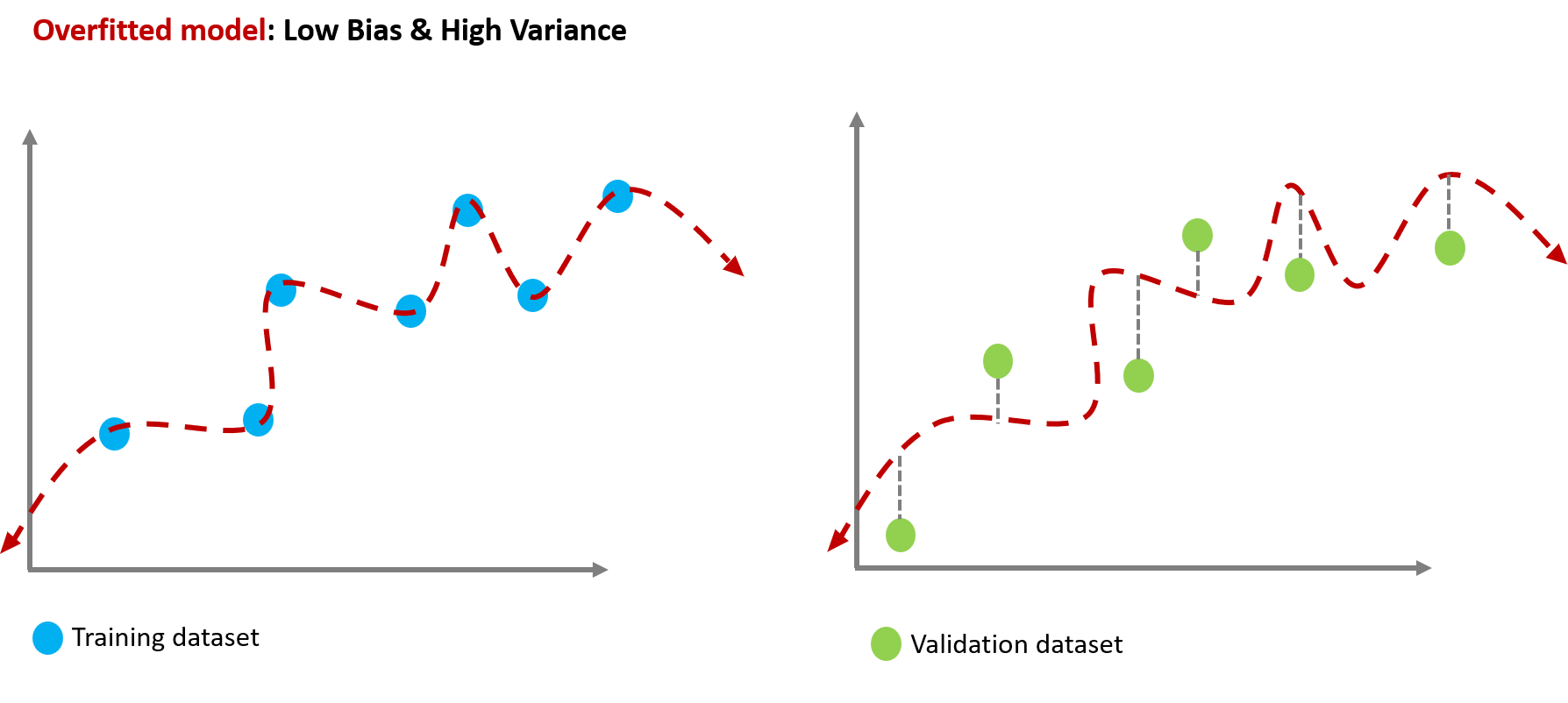

An overfitted model has low bias & high variance as below:

Possible reasons of overfitting and their solutions are as follows:

In terms of training process,

Reason 1. The model memorized the entire training dataset, including its noise.

Solution 1.

Early stopping:

Early stopping means ending the training process before the model learns every detail of the training dataset, including its noise. In practice, the validation error is monitored during training, and training stops once that error no longer improves.
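
As a rough illustration of the idea, here is a minimal sketch of a patience-based early stopping rule. The `EarlyStopper` class, its `patience` and `min_delta` parameters, and the list of validation losses are all hypothetical and made up for this example; a real training loop would compute the validation loss after each epoch instead of reading it from a list.

```python
class EarlyStopper:
    """Hypothetical helper: signals a stop when validation loss stops improving."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # smallest decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def update(self, epoch, val_loss):
        """Record one validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses: they improve at first, then rise as overfitting sets in.
val_losses = [1.0, 0.8, 0.6, 0.5, 0.55, 0.6, 0.7, 0.9]

stopper = EarlyStopper(patience=3)
stopped_at = None
for epoch, val_loss in enumerate(val_losses):
    # A real loop would train for one epoch here and then evaluate on validation data.
    if stopper.update(epoch, val_loss):
        stopped_at = epoch
        break

print("stopped at epoch", stopped_at, "best epoch", stopper.best_epoch)
```

In a real setting, the model weights from the best epoch would usually be saved and restored after stopping, so the final model is the one with the lowest validation loss.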

In terms of model selection,

Reason 2. The model is too complex.

Solution 2.

Regularization:

Regularization refers to a variety of techniques that make the model simpler.

L1 and L2 regularization are two such methods: they add a penalty term on the size of the coefficients to the loss of a regression model. This topic will be covered in detail in the next post.
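
To make the idea of a penalty term concrete before the detailed post, here is a minimal sketch of a regularized loss for a linear model. The function names, the `lam` parameter (the penalty strength), and the tiny dataset are assumptions chosen for illustration, not a specific library's API.

```python
def predict(weights, bias, x):
    """Linear model: weighted sum of the features plus a bias."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, X, y):
    """Mean squared error over the dataset."""
    errors = [predict(weights, bias, x) - t for x, t in zip(X, y)]
    return sum(e * e for e in errors) / len(errors)


def l1_penalty(weights):
    """L1 penalty: sum of absolute weights."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """L2 penalty: sum of squared weights."""
    return sum(w * w for w in weights)


def regularized_loss(weights, bias, X, y, lam, kind="l2"):
    """Data loss plus lam times the chosen penalty (the bias is not penalized)."""
    if kind == "l1":
        penalty = l1_penalty(weights)
    elif kind == "l2":
        penalty = l2_penalty(weights)
    else:
        raise ValueError("kind must be 'l1' or 'l2'")
    return mse(weights, bias, X, y) + lam * penalty


# Made-up data that the weights below fit exactly, so only the penalty remains.
X = [[1.0, 2.0], [2.0, 0.0]]
y = [5.0, 2.0]
weights, bias = [1.0, 2.0], 0.0

print(regularized_loss(weights, bias, X, y, lam=0.1, kind="l1"))
print(regularized_loss(weights, bias, X, y, lam=0.1, kind="l2"))
```

Because large weights increase the total loss, minimizing this loss pushes the model toward smaller weights, which is the sense in which the model becomes simpler.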

In terms of feature engineering,

Reason 3. The model uses too many features.

Solution 3.

Remove features:

Removing non-essential features can help the model find the true patterns of the task.
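
One simple way to decide which features are non-essential is a correlation filter, sketched below. This is only one of many possible feature selection approaches and is an assumption of this example; the `select_features` helper, the `threshold` value, and the feature names are hypothetical. Note that Pearson correlation only captures linear relationships, so a feature with a low score is not always useless.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists (0.0 if either is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if std_x == 0 or std_y == 0:
        return 0.0
    return cov / (std_x * std_y)


def select_features(columns, target, threshold=0.3):
    """Keep the features whose absolute correlation with the target meets the threshold."""
    return [
        name
        for name, values in columns.items()
        if abs(pearson(values, target)) >= threshold
    ]


# Made-up dataset: one informative feature, one unrelated, one constant.
target = [1.0, 2.0, 3.0, 4.0, 5.0]
columns = {
    "size": [2.0, 4.0, 6.0, 8.0, 10.0],
    "noise": [3.0, 1.0, 3.0, 1.0, 3.0],
    "constant": [7.0, 7.0, 7.0, 7.0, 7.0],
}

kept = select_features(columns, target)
print(kept)
```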

How to avoid Underfitting

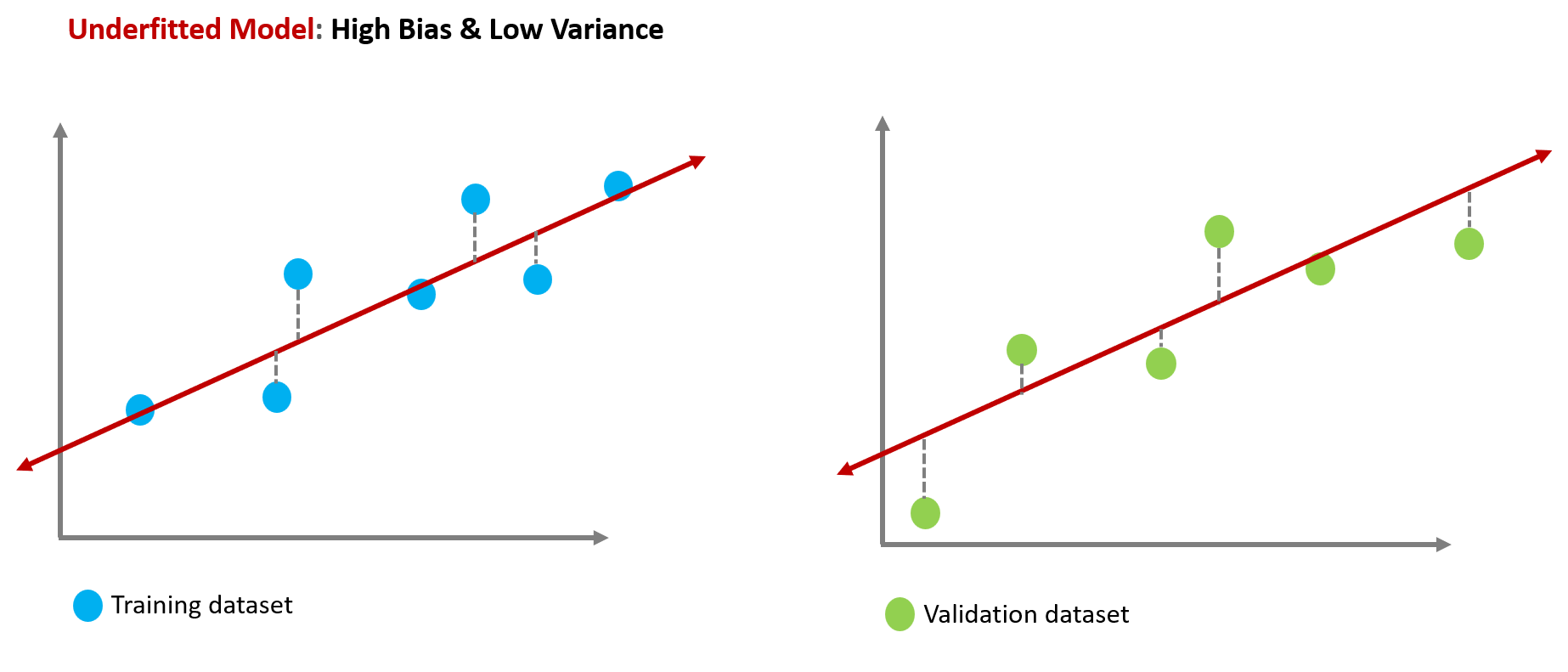

An overfitted model has high bias & low variance as below:

Possible reasons of underfitting and their solutions are as follows:

Reason 1. The model could not learn enough the patterns of the task.

Solution 1.

More time for training:

This time, the training may have stopped too early.

Increasing the number of epochs or the duration of training can keep the model from underfitting.
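
The effect of the number of epochs can be seen in a small sketch like the one below, which fits a one-weight linear model with gradient descent. The data, the learning rate, and the epoch counts are made up for illustration.

```python
def train(xs, ys, epochs, lr=0.01):
    """Fit y = w * x with plain gradient descent on the mean squared error."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


def mse(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data that follows y = 2x exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

w_short = train(xs, ys, epochs=5)    # stopped too early: still underfitted
w_long = train(xs, ys, epochs=200)   # trained long enough to approach w = 2

print("5 epochs:  ", w_short, mse(w_short, xs, ys))
print("200 epochs:", w_long, mse(w_long, xs, ys))
```

With only a few epochs the weight is still far from 2 and the training error is high; with more epochs the error shrinks toward zero.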

In terms of model selection,

Reason 2. The model is too simple.

Solution 2.

A more complex model:

For example, we can try replacing a linear model with a higher-order polynomial model.

A more flexible model can capture patterns that the simpler one misses, which helps resolve underfitting.
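
The sketch below compares a linear fit with a quadratic fit on data that follows a curve. It is a minimal pure-Python least-squares fit written for this example (in practice a numerical library would normally be used); the helper names and the data are assumptions.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations.

    Returns the coefficients ordered from the constant term upward.
    """
    n = degree + 1
    # Augmented matrix [A^T A | A^T y], where A[i][j] = xs[i] ** j.
    aug = [
        [sum(x ** (r + c) for x in xs) for c in range(n)]
        + [sum((x ** r) * y for x, y in zip(xs, ys))]
        for r in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def predict(coeffs, x):
    """Evaluate the polynomial at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


def mse(coeffs, xs, ys):
    """Mean squared error of the polynomial on the data."""
    return sum((predict(coeffs, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data that follows y = x^2, which a straight line cannot capture.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [x * x for x in xs]

linear = fit_polynomial(xs, ys, degree=1)
quadratic = fit_polynomial(xs, ys, degree=2)

print("linear MSE:   ", mse(linear, xs, ys))
print("quadratic MSE:", mse(quadratic, xs, ys))
```

The straight line leaves a large error on this data, while the quadratic model fits it almost exactly. Raising the degree much further than the data requires would move the model back toward overfitting.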

In the next post, we will learn:

- What L1 and L2 regularization are

- How they make a better ML model

References

- Reference1: Overfitting and underfitting in machine learning

- Reference2: What is Overfitting in Computer Vision? How to Detect and Avoid it