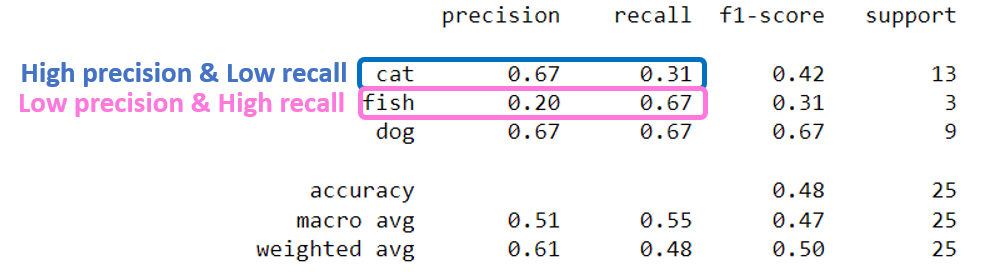

High precision & Low recall vs. Low precision & High recall

In a previous post, we learned how to calculate the macro and weighted averages of precision, recall and F1-score for a trained model.

If you are not sure how to do it, check here: Macro vs. Weighted Average in a Classification Report of scikit-learn

In this post, we will learn how to assess a model's performance in terms of precision and recall.

By the end of this article, we will be able to answer the following questions:

- How do we assess a model output with high precision & low recall?

- How do we assess a model output with low precision & high recall?

There are four possible outcomes for a model's performance in terms of precision and recall:

- “high precision & high recall”

- “high precision & low recall”

- “low precision & high recall”

- “low precision & low recall”

High precision & High recall

This is the best case that we can get from our model.

High precision & high recall means that the model returns accurate results (high precision) and also returns most of the actual positive instances (high recall).

Let’s say that the goal of our model is to recognize cat images correctly.

For our model, high precision & high recall means that it recognizes almost all of the cat images and rarely labels a non-cat image as a cat.

- High precision: few FPs (False Positives) relative to the number of true positives

- High recall: few FNs (False Negatives) relative to the number of true positives

- FP for our model: The model predicted an image as a cat (positive) but it was actually a dog (negative).

- FN for our model: The model predicted an image as a fish (negative) but it was actually a cat (positive).
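To make these FP and FN definitions concrete, here is a minimal Python sketch that counts TP, FP and FN for the "cat" class. The six labels and the helper name `count_outcomes` are hypothetical, made up for illustration. Every label other than "cat" is treated as negative, so a dog predicted as a cat is an FP and a cat predicted as a fish is an FN.

```python
# Ground-truth and predicted labels for six hypothetical images.
# "cat" is the positive class; every other label counts as negative.
y_true = ["cat", "dog", "cat", "fish", "cat", "dog"]
y_pred = ["cat", "cat", "fish", "fish", "cat", "dog"]


def count_outcomes(y_true, y_pred, positive="cat"):
    """Return (TP, FP, FN) for one class, treating all other labels as negative."""
    tp = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1  # a cat predicted as a cat
        elif predicted == positive and actual != positive:
            fp += 1  # e.g. a dog predicted as a cat
        elif predicted != positive and actual == positive:
            fn += 1  # e.g. a cat predicted as a fish
    return tp, fp, fn


print(count_outcomes(y_true, y_pred))  # (2, 1, 1)
```

True negatives (a fish predicted as a fish, a dog predicted as a dog) are not counted, because neither precision nor recall uses them.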

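Looking at how precision and recall are calculated explains why few FPs give high precision and few FNs give high recall:

- Precision = TP / (TP + FP)

- Recall = TP / (TP + FN)

Both metrics share the numerator TP (True Positives), so for a fixed number of TPs, every additional FP lowers precision and every additional FN lowers recall.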

Not sure what FP & FN are or how to calculate precision & recall? Check here: Evaluation of a Model for Classification Problem (Part 1)
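As a minimal sketch, precision and recall can be computed directly from the counts using their standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The counts below are hypothetical, and returning 0.0 when a denominator is zero is a convention assumed here.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP): share of predicted cats that really are cats."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Recall = TP / (TP + FN): share of actual cats that the model found."""
    return tp / (tp + fn) if tp + fn else 0.0


# Hypothetical counts: 8 cats found, 2 non-cats labelled "cat", 2 cats missed.
tp, fp, fn = 8, 2, 2
print(precision(tp, fp))  # 0.8
print(recall(tp, fn))     # 0.8

# Another hypothetical model with TP = 8, FP = 0, FN = 12:
# no false alarms, but many missed cats.
print(precision(8, 0))    # 1.0
print(recall(8, 12))      # 0.4
```

With TP held fixed, removing FPs pushes precision up while adding FNs pulls recall down, which is the high precision & low recall pattern discussed next.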

High precision & Low recall

A model with high precision & low recall returns very few results, but most of its predicted labels are correct.

For our model, this is like casting a very small but highly specialised net: it does not catch many cats, but almost everything in the net is a cat:

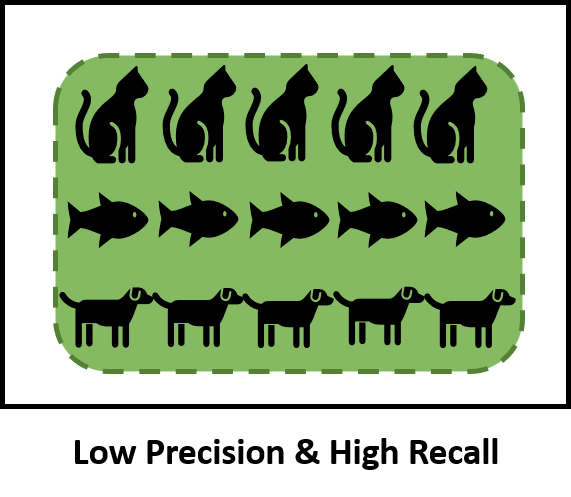
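A toy version of this "small net" can be sketched in Python. The data is hypothetical: ten images, four of which are cats (1 = cat, 0 = not a cat), and a model that labels only one image as a cat, correctly.

```python
# Ten hypothetical images: 1 = cat (positive), 0 = not a cat (negative).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# A "small net": the model labels only one image as a cat, and it is right.
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

precision = tp / (tp + fp)  # 1 / (1 + 0): everything in the net is a cat
recall = tp / (tp + fn)     # 1 / (1 + 3): three of the four cats are missed
print(precision, recall)    # 1.0 0.25
```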

Low precision & High recall

A model with low precision & high recall returns many results, but most of its predicted labels are incorrect.

For our model, this is like casting a very wide net: it catches most of the cats, but also a lot of other images.
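The "wide net" can be sketched with hypothetical data: ten images, four of which are cats (1 = cat, 0 = not a cat), and an extreme model that labels every image as a cat.

```python
# Ten hypothetical images: 1 = cat (positive), 0 = not a cat (negative).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# A "wide net": the model labels every image as a cat.
y_pred = [1] * 10

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

precision = tp / (tp + fp)  # 4 / (4 + 6): most predicted cats are not cats
recall = tp / (tp + fn)     # 4 / (4 + 0): no cat is missed
print(precision, recall)    # 0.4 1.0
```

Labelling everything as positive always gives perfect recall, which is why recall alone says little about a model's quality.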

Low precision & Low recall

This is the worst case we can get from our model.

For our model, many of the images it labels as cats are not cats, and it also misses many of the actual cat images.
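This worst case can also be sketched with hypothetical data: ten images, four of which are cats (1 = cat, 0 = not a cat), and a model that labels four images as cats but gets only one of them right.

```python
# Ten hypothetical images: 1 = cat (positive), 0 = not a cat (negative).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# The model labels four images as cats, but only the first one is a cat.
y_pred = [1, 0, 0, 0, 1, 1, 1, 0, 0, 0]

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

precision = tp / (tp + fp)  # 1 / (1 + 3): most predicted cats are wrong
recall = tp / (tp + fn)     # 1 / (1 + 3): most actual cats are missed
print(precision, recall)    # 0.25 0.25
```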

Recap

We’ve learned how to assess the performance of a model in terms of precision & recall.

A model output with high precision & low recall means that the instances it assigns to a class are mostly correct, but it misses many of the instances that actually belong to that class.

A model output with low precision & high recall means that it finds most of the instances of a class, but it also assigns many instances from other classes to that class.
